Assessment, Inventory and Monitoring Desk Guide

The BLM National AIM (NAIM) Team provides and maintains this Desk Guide for use by BLM AIM State Leads, Monitoring Coordinators, Data Analysts, and other Program Leads (collectively referred to as the “State Office AIM Team”), as well as AIM Project Leads and other AIM practitioners involved in data collection, data use, and management of public lands. Public and internal versions of this Desk Guide are provided on the AIM Website. The public version is intended to share information about AIM, but internal links associated with working files have been removed (denoted by grey highlighted text). The internal version is provided with live links to internal BLM working files, network directories, and SharePoint sites.

This Desk Guide describes the process to implement the six principles of the AIM Strategy by providing guidance on how to initiate and plan a monitoring project, how to create a monitoring design, how to perform data collection and management, and how to use data following a suggested standard workflow. Implementing an AIM project is an iterative process that involves four main phases: planning and project initiation, design, data collection, and analysis and reporting. Completing one phase informs, and leads to, subsequent phases.

Use this website to help navigate the AIM Desk Guide document. Each dropdown section provides an overview of the information covered and corresponds directly to a section within the document.

- External Desk Guide
- Internal Desk Guide

The Monitoring Design Worksheet is a template used to guide and document the objectives needed to develop successful monitoring efforts. Follow the steps in Desk Guide section 4.0 Design to complete this template. A Monitoring Design Worksheet example is also provided.

- Monitoring Design Worksheet TEMPLATE
- Monitoring Design Worksheet EXAMPLE

Desk Guide Topics

- Planning and Project Initiation


Planning and implementing an AIM project can be simple or complex depending on the needs and scale of the monitoring effort. This section discusses the five basic steps to plan and implement an AIM project and identifies specific people who will be involved and their roles and responsibilities in the process. Many offices may have multiple ongoing AIM efforts across different resources (terrestrial, lotic, and/or riparian and wetland) and different scales (Land Use Planning, allotment, or treatment scale). Offices wanting to implement an AIM project should learn about what monitoring has already occurred in their local field office or district office to provide context for planning continued monitoring efforts.

See Chapter 3 in the AIM Desk Guide for more information.

- Design


When starting a new AIM sample design or reviewing an existing one, consult with your interdisciplinary team (IDT) to identify specific management questions and goals of interest. Once these questions are identified, an appropriate monitoring plan can be developed to make the necessary technical decisions using an AIM sample design. AIM encourages the use of sample designs that are appropriate for the management questions at hand. For example, restoration questions may require a Before-After-Control-Impact (BACI) approach, LUP/RMP evaluations often require a random design, and a grazing-specific question might be approached with a Key Area/Designated Monitoring Area. The most common AIM sample designs are a combination of random and targeted sample points. Spatially balanced random designs are a random draw of points or stream reaches intended to report conditions across the landscape. Targeted designs consist of points that are manually selected to ensure data are collected at a very specific, non-random location. A random design is usually drawn by the National AIM Team (see Step 5: Select Monitoring Locations, Tools to Select Points), and targeted points are usually selected by the Project Lead. The development of a random, targeted, or combination design should be driven by monitoring objectives and documented carefully through the Monitoring Design Worksheet (MDW). The National AIM Team is available to provide guidance and support for deciding on and implementing any appropriate sample design.

The process of designing a monitoring and assessment effort can be broken down into a series of steps. This design section lists the steps in the order that they are normally completed, but there is no “single” way to design a monitoring program; the steps should be viewed as an iterative process. As an IDT works through the steps in the design process, decisions made earlier in the process may require modification. By completing the MDW during the design process, the IDT can ensure the completeness of the design. A link to the MDW template can be found in §4.2 Tools in the Desk Guide document.

Each step in the design process is tied to a step in the MDW. Once an MDW is drafted, the Project Lead should coordinate with the appropriate State Lead and the National AIM Team to review and update it as necessary. Completion of the worksheet is an iterative process, and it can be revised and updated throughout the life cycle of an AIM project. To request further assistance, contact the appropriate resource personnel at the BLM National Operations Center.

See Chapter 4 in the AIM Desk Guide for more information.

- Data Collection and Management


There are four main steps to data collection: preparation, field methods training, data collection, and data ingestion. These steps include details about AIM crew hiring, field methods training, evaluation and rejection of points, and related tasks, each of which has important associated quality assurance (QA) and quality control (QC) procedures. The State Office AIM Team, AIM Project Leads, resource specialists, other field office staff, data collectors, and National AIM Team members are all a critical part of the data collection process. Whether or not they are collecting data, everyone has a role to play in data quality.

The AIM principles include QA and QC for each step of the data collection process, ensuring quality data that practitioners can use with confidence. Quality assurance and quality control are the responsibility of all participants and occur throughout the data collection process. Quality assurance is a proactive process intended to minimize the occurrence of errors and includes strategies like standardized required trainings, data collector calibration, and electronic data capture tools with built-in data rules and documentation. Quality control is a retrospective process that identifies errors after data have been collected. The ability to correct errors during the QC process is limited because points cannot be revisited under the exact conditions that occurred during the original data collection event. Quality control tools include error review scripts and dashboards, data review dashboards, and manual review by data collectors, AIM Project Leads, the State Office AIM Team, and/or the National AIM Team Data Managers.
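As an illustration of the kind of retrospective check an error review script might perform, the following minimal Python sketch flags missing or out-of-range values in collected records. It is not the AIM program's actual tooling: the field names (`bare_soil_pct`, `canopy_gap_pct`), the `point_id` key, and the range rules are hypothetical and chosen only for the example.

```python
# Hypothetical range rules: field name -> (minimum, maximum).
# These fields and limits are assumptions for illustration, not an AIM schema.
RANGE_RULES = {
    "bare_soil_pct": (0.0, 100.0),
    "canopy_gap_pct": (0.0, 100.0),
}


def check_record(record, rules=RANGE_RULES):
    """Return a list of error messages for one collected record."""
    errors = []
    for field, (low, high) in rules.items():
        value = record.get(field)
        if value is None:
            errors.append(f"{field}: missing")
        elif not (low <= value <= high):
            errors.append(f"{field}: {value} outside [{low}, {high}]")
    return errors


def review(records):
    """Map each point ID to its errors, skipping records that pass."""
    flagged = {}
    for record in records:
        errors = check_record(record)
        if errors:
            flagged[record["point_id"]] = errors
    return flagged


if __name__ == "__main__":
    sample = [
        {"point_id": "A-001", "bare_soil_pct": 12.5, "canopy_gap_pct": 40.0},
        {"point_id": "A-002", "bare_soil_pct": 130.0, "canopy_gap_pct": 40.0},
        {"point_id": "A-003", "bare_soil_pct": 5.0},
    ]
    for point_id, errors in review(sample).items():
        print(point_id, errors)
```

The same rules could also be applied at entry time in an electronic data capture form, which would make them a QA measure rather than a QC one; flagged records would still need manual review, since the check cannot say what the correct value was.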

See Chapter 5 in the AIM Desk Guide for more information.

- Applying AIM Data: Analysis and Reporting


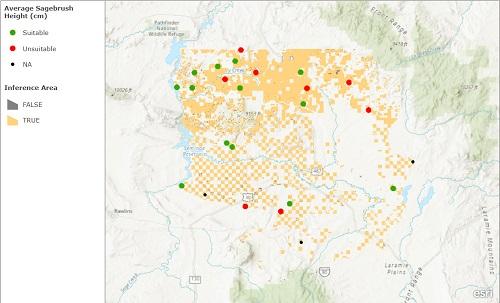

There are numerous ways that AIM data can be used to inform land management and associated decisions. This section presents a standardized workflow to address the most common ways to use AIM and other natural resource data. While the workflow presented here is AIM-specific, it is intentionally similar to the steps outlined in BLM Technical Note (TN) 453, which specifically focuses on land health evaluations and authorizations of permitted uses, and to the workflow associated with LUP effectiveness evaluations. AIM data can be analyzed for an entire sample design area as specified in an MDW or opportunistically, using the data that fall within a particular area of interest. For assistance with any of these steps, or with additional analyses, please contact the State Office and National AIM Teams.

In addition to standard workflows, examples of AIM data being used to inform decision-making can be very helpful. Several current AIM data-use examples are described on the BLM AIM resources page (www.blm.gov/aim/resources), in the AIM Decision Library (internal BLM resource), or in the AIM Practitioner’s webinars (https://www.blm.gov/aim/webinars).

See Chapter 6 in the AIM Desk Guide for more information.

Appendices

- Appendix A: Roles and Responsibilities


This appendix defines many of the roles and responsibilities of AIM practitioners, which may include the National AIM Program Lead, the National AIM Team, the State Office AIM Team, District and Field Offices, AIM crew managers and leads, and AIM data collectors.

- Appendix B: Setting Benchmarks


Setting benchmarks for indicators is a necessary but often challenging step in defining monitoring objectives. Benchmarks will often vary across the landscape based on natural environmental gradients; therefore, variability in ecological potential should be considered when setting benchmarks. The goal is to ensure that assessed sites are compared to sites with similar potential. This section steps through the process of setting benchmarks and is organized into three subsections: best professional judgement (applies throughout all benchmark decisions), policy-based approaches (priority when available, but often limited), and non-policy-based approaches. Within non-policy-based approaches, this section discusses three general, sometimes overlapping, categories: determining ecological potential (e.g., predictive models and ecological site descriptions), screening monitoring data to include the range of natural conditions and exclude disturbance, and using values or ranges from peer-reviewed literature.

- Appendix C: Sample Sufficiency Tables


The number of AIM points on a landscape is often determined by factors such as funding or available resources, but the number of sample points and the variability of conditions across the landscape affect how confident you can be in your condition estimates. AIM practitioners can use sample sufficiency tables to better understand how total sample size affects confidence intervals. These tables can be used in two ways: to logically inform an initial sample size, or to more statistically inform a new design where data already exist.
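The relationship between sample size and confidence interval width can be sketched with a simple calculation. The Python example below uses the textbook normal approximation for a proportion (for example, the proportion of points meeting a benchmark); this is an assumption made for illustration and is not necessarily the method behind the sample sufficiency tables, which may account for design weights and spatially balanced sampling. The function names and the default 95% confidence level (`z = 1.96`) are likewise hypothetical choices.

```python
import math


def ci_half_width(p, n, z=1.96):
    """Half-width of a normal-approximation confidence interval for a
    proportion p estimated from n points (z = 1.96 is roughly 95%)."""
    return z * math.sqrt(p * (1.0 - p) / n)


def points_needed(p, half_width, z=1.96):
    """Smallest n whose approximate half-width is at most `half_width`."""
    return math.ceil(z ** 2 * p * (1.0 - p) / half_width ** 2)


if __name__ == "__main__":
    # p = 0.5 is the most conservative guess (widest interval).
    for n in (10, 25, 50, 100):
        print(f"n = {n:3d}  half-width = {ci_half_width(0.5, n):.3f}")
    print("points for +/- 0.10:", points_needed(0.5, 0.10))
```

Because the half-width shrinks with the square root of the sample size, halving the interval width requires roughly four times as many points, which is why existing data on variability are useful when sizing a new design.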

- Appendix D: Terrestrial and Lotic Master Samples


A master sample was created for AIM and has been used at times. This appendix documents the development, limitations, and current use of the Master Sample.